AI generated content and copyright

June 13, 2024 • Sushmitha Venkatesh

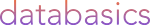

We have recently written articles on AI in digital asset management (DAM) and use of LLMs in DAM. As artificial intelligence (AI) and Large Language Models (LLMs) continue to advance, their implications for copyright law have become a significant focus of legal discussions worldwide.

In Australia, the integration of AI technologies in content creation and management raises complex questions about copyright ownership, infringement, and fair dealing (Australia's counterpart to the US fair use doctrine). LLMs, which can generate human-like text and analyse vast amounts of data, challenge traditional notions of authorship and intellectual property rights. This introduction looks at how AI and LLMs intersect with copyright law in Australia, highlighting the evolving legal landscape and the need for regulatory frameworks that balance innovation with the protection of creators' rights.

What about the copyright?

Integration of AI technologies in content creation brings about a crucial discussion surrounding copyright laws. With the rise of AI-generated content, there are growing concerns about infringement and claims of obtaining content through improper channels. As a result, copyright laws are undergoing significant shifts to address these challenges and ensure fair use and attribution in this evolving landscape. Let's dive deeper into this multifaceted realm where AI and human collaboration are reshaping not only work dynamics but also the legal frameworks governing creative output.

How will the recent changes made by the ACC (Australian Copyright Council) affect your day-to-day practice with AI-generated content?

The difference between a computer-generated work and a computer-assisted work lies in the level of human involvement. How does that work?

- Computer-generated work: the computer algorithm or program generates the content entirely on its own, often based on predefined rules, algorithms, or machine learning models. In legal terms, the copyright in a computer-generated work is typically attributed to the person or entity that owns or controls the computer program responsible for creating the work.

- Computer-assisted work: human creativity and decision-making run throughout the creative process, with the computer serving as a tool to assist or enhance that creativity. In legal terms, the copyright in a computer-assisted work is typically attributed to the human creators who made the creative decisions, even though computers were used as tools.

Who owns the rights to a computer-generated work?

Making arrangements for the creation of a computer-generated work can involve various legal, ethical, and practical issues, such as ownership and attribution.

Determining who owns the rights to a computer-generated work can be complex. In traditional creative processes, the creator of the work typically holds the copyright. However, with computer-generated works, the situation may be less clear, especially if the work is generated by an AI system. Legal frameworks may need to evolve to address questions of ownership and attribution in these cases.

Should one disclose that work is computer-generated?

The question is complex and multifaceted, with reasonable arguments on both sides.

Arguments in Favour of Disclosure:

- Transparency and Authenticity:

Some argue that disclosure of computer-generated works promotes transparency and ensures that consumers and audiences are aware of the origin of the content they are consuming. This transparency is particularly important in contexts where the distinction between human-created and computer-generated content may influence perception or valuation.

- Consumer Protection:

Providing disclosure of computer-generated works can be viewed as a form of consumer protection, ensuring that consumers are not misled or deceived about the nature of the content they are consuming.

- Legal Clarity:

Some argue that requiring disclosure of computer-generated works can provide legal clarity and guidance, helping to address potential copyright issues, ownership disputes, and attribution concerns that may arise from the use of automated or algorithmic content creation techniques.

Arguments Against Disclosure:

- Practical Challenges:

Implementing a requirement for disclosure of computer-generated works may pose practical challenges, particularly in cases where the distinction between human-created and computer-generated content is not clear-cut. Defining clear criteria for what constitutes a computer-generated work and enforcing disclosure requirements could be complex and subjective.

- Freedom of Expression:

Artists should have the flexibility to choose their preferred tools and techniques without being compelled to disclose specific details about their creative process.

Recent US litigation:

- More than 27 high-profile authors and the Authors Guild (plus a class action) have sued Microsoft and a range of OpenAI entities.

- A claim is made against each entity based on its role in the training of ChatGPT.

- Paragraph 206 of the complaint alleges: "OpenAI unlawfully and wilfully copied the Grisham Infringed Works and used them to 'train' OpenAI's LLMs without Grisham's permission."

- Andersen v. Stability AI Ltd., 3:23-cv-00201: the claim alleges violations of the Digital Millennium Copyright Act and of the US right of publicity, that is, the right of an artist to their name and aspects of their identity.

- Another case, this one concerning the copying of visual images, is Getty Images (US), Inc. v. Stability AI, Inc., 1:23-cv-00135-JLH.

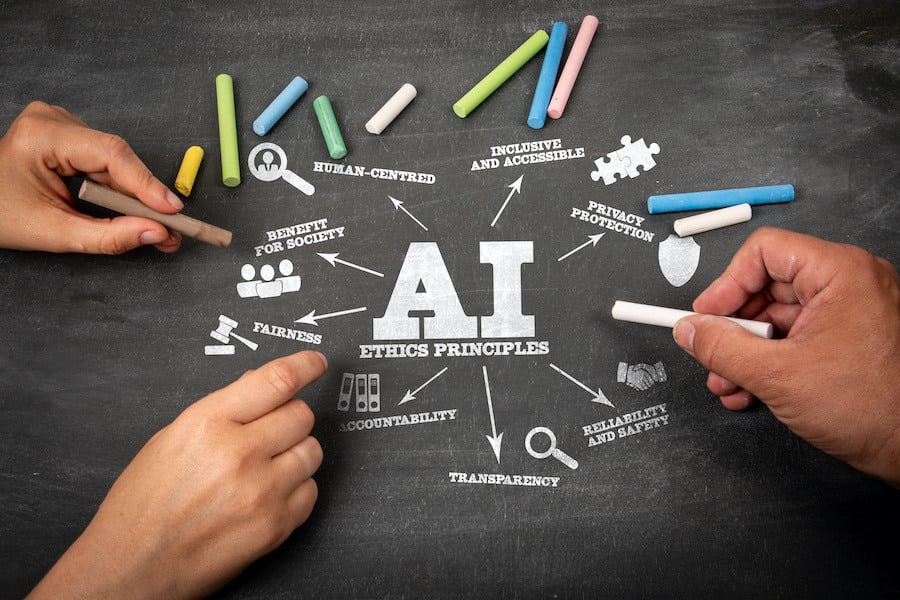

Does generative AI violate copyright laws?

It depends. Generative AI may violate copyright laws when the program has access to a copyright owner's works and generates outputs that are "substantially similar" to those existing works, according to the US Congressional Research Service.

However, there is no US federal legal consensus on how to determine substantial similarity.

Here are some recent policy developments:

Australian Policy developments:

The paper identifies the following as the issues of greatest significance:

- the use of inputs and data to train AI models

- the potential for improved transparency in the use of copyright materials by AI

- the use of AI to create imitative works and/or outputs otherwise infringing copyright, and

- the copyright status of AI outputs

The paper proposes that the government establish a "standing mechanism for ongoing engagement" to:

- Develop a shared understanding across stakeholder groups of the policy problems and legal uncertainties.

- Provide feedback to Government on any policies or reforms developed in response.

- Have terms of reference that do not duplicate the work of other bodies.

The Government announced on 5 December 2023 that it would establish a copyright and artificial intelligence expert group.

UK Policy developments:

In the chapter on copyright the committee recommends:

- The Government should publish its view on whether copyright law provides sufficient protections to rightsholders, given recent advances in LLMs. If this identifies major uncertainty the Government should set out options for updating legislation to ensure copyright principles remain future proof and technologically neutral.

- The IPO [Intellectual Property Office] code must ensure creators are fully empowered to exercise their rights, whether on an opt-in or opt-out basis. Developers should make it clear whether their web crawlers are being used to acquire data for generative AI training or for other purposes. This would help rightsholders make informed decisions and reduce risks of large firms exploiting adjacent market dominance.

- The Government should encourage good practice by working with licensing agencies and data repository owners to create expanded, high quality data sources at the scales needed for LLM training. The Government should also use its procurement market to encourage good practice.

- The IPO code should include a mechanism for rights holders to check training data. This would provide assurance about the level of compliance with copyright law.
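The opt-out idea and the point about crawlers declaring their purpose can be illustrated with robots.txt, an existing voluntary convention that websites use to tell crawlers what they may fetch. The committee's recommendations above do not name robots.txt; it is used here only as an assumption for illustration, and the crawler names `ExampleAITrainingBot` and `ExampleSearchBot` are hypothetical. A minimal sketch using Python's standard library:

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt for a rightsholder's site. The crawler names are
# invented for illustration; real crawlers publish their own user-agent names.
ROBOTS_TXT = """\
User-agent: ExampleAITrainingBot
Disallow: /

User-agent: *
Allow: /
"""


def may_crawl(robots_txt: str, user_agent: str, url: str) -> bool:
    """Return True if the given robots.txt rules permit user_agent to fetch url."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser.can_fetch(user_agent, url)


if __name__ == "__main__":
    url = "https://example.com/gallery/photo-1.jpg"
    for agent in ("ExampleAITrainingBot", "ExampleSearchBot"):
        print(agent, "allowed:", may_crawl(ROBOTS_TXT, agent, url))
```

An opt-out of this kind only works if the crawler identifies itself with a distinct name for AI training and chooses to honour the rules, which is why the recommendation asks developers to be clear about what their crawlers are for.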

Canadian Policy developments:

- Digital Charter Implementation Act 2022

- A framework for the development and use of artificial intelligence.

- The framework includes “outlining clear criminal prohibitions and penalties regarding the use of data obtained unlawfully for AI development or where the reckless deployment of AI poses serious harm and where there is fraudulent intent to cause substantial economic loss through its deployment”

The Future of AI Copyright

If generative AI models continue to go unchecked, many experts in this space believe it could spell big trouble — not only for the human creators themselves, but the technology too.

“When these AI models start to hurt the very people who generate the data that it feeds on, the artists, it’s destroying its own future. So really, when you think about it, it is in the best interest of AI models and model creators to help preserve these industries. So that there is a sustainable cycle of creativity and improvement for the models.”

In the U.S., much of this preservation will be incumbent on the courts, where creators and companies are duking it out right now. Looking ahead, the level at which U.S. courts protect and measure human-made inputs in generative AI models could be reminiscent of what we’ve seen globally, particularly in other Western nations.

The United Kingdom is one of only a handful of countries to offer copyright protection for works generated solely by a computer. The European Union, which takes a much more pre-emptive approach to legislation than the U.S., has approved a sweeping AI Act that addresses many of the concerns raised by generative AI.

Can you copyright the output of your LLM prompts?

The answer is “No”, since the output isn’t considered to be a work of human creation.

It has long been the posture of the U.S. Copyright Office that there is no copyright protection for works created by non-humans, including machines. Therefore, the product of a generative AI model cannot be copyrighted.

Equally, these AI systems (including image generators, AI music generators and chatbots like ChatGPT) cannot legally be considered the author of the material they produce. Their outputs are simply a culmination of human-made work, much of which has been scraped from the internet and is copyright-protected in one way or another.

“One thing you have to know about copyright law is, for infringement of one thing only (it could be a text, an image, a song) you can ask the court for $150,000. So imagine the people who are scraping millions and millions of works.” Daniel Gervais, Professor at Vanderbilt Law School.
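To put the scale of that remark in numbers, here is a rough sketch of the arithmetic. It assumes the US statutory damages figures in 17 U.S.C. § 504(c): $750 to $30,000 per infringed work, rising to a ceiling of $150,000 per work where infringement is wilful. The count of one million works is purely hypothetical, and actual awards are set by the court and depend on factors such as whether the works were registered, so the totals are theoretical ceilings rather than predictions.

```python
# US statutory damages per infringed work, in US dollars (17 U.S.C. 504(c)).
STATUTORY_MIN = 750
STATUTORY_MAX = 30_000
WILFUL_MAX = 150_000  # ceiling where infringement is found to be wilful


def exposure(works: int) -> dict[str, int]:
    """Theoretical statutory damages exposure for a given number of works."""
    return {
        "minimum": works * STATUTORY_MIN,
        "ordinary_maximum": works * STATUTORY_MAX,
        "wilful_maximum": works * WILFUL_MAX,
    }


if __name__ == "__main__":
    hypothetical_works = 1_000_000  # assumption for illustration only
    for label, amount in exposure(hypothetical_works).items():
        print(f"{label}: ${amount:,}")
```

On these assumptions, one million works at the wilful ceiling comes to $150 billion, which is the order of magnitude the quote is pointing at.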